🏷️ €19900 | Base Price

MX-AI License including 1 year of updates and support 🔹

+€4400 for 1 additional year of updates and support 🔹

+€9900 for 3 additional years of updates and support 🔹

Contact us for academic pricing or tailored packages.


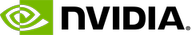

MX-AI is BubbleRAN’s automation and intelligence platform that brings autonomous operations and optimization to 5G/6G networks from lab to production. It implements both AI-for-RAN and AI-on-RAN services through a Multi-Agent AIFabric, which orchestrates collaborative AI agents to achieve closed-loop automation. This turns network observability into insight, and insight into a set of actions. The platform is powered by the BubbleRAN Agentic Toolkit (BAT), which provides ready-to-use AI-RAN DevKits and a catalog of reusable and extensible AIFabrics.
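The observability → insight → action chain can be pictured as a simple closed loop. The sketch below is purely illustrative: the `ClosedLoop` class, the KPI names (`cell`, `ul_mbps`) and the uplink target are hypothetical stand-ins, not MX-AI APIs. In a real AIFabric, each stage would be handled by one or more agents calling tools.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ClosedLoop:
    """Toy observe -> insight -> action loop (hypothetical, not an MX-AI API)."""

    ul_target_mbps: float  # assumed uplink throughput target
    actions: List[str] = field(default_factory=list)

    def observe(self, kpis: dict) -> dict:
        # Observability: keep only the KPIs this loop reasons about.
        return {"cell": kpis["cell"], "ul_mbps": kpis["ul_mbps"]}

    def analyze(self, obs: dict) -> Optional[str]:
        # Insight: flag a cell whose uplink throughput is below target.
        if obs["ul_mbps"] < self.ul_target_mbps:
            return f"low UL throughput on {obs['cell']}"
        return None

    def act(self, insight: Optional[str]) -> None:
        # Action: record a reconfiguration request (an agent would call a tool here).
        if insight is not None:
            self.actions.append(f"reconfigure: {insight}")

    def step(self, kpis: dict) -> None:
        self.act(self.analyze(self.observe(kpis)))


loop = ClosedLoop(ul_target_mbps=50.0)
loop.step({"cell": "gnb1", "ul_mbps": 30.0})  # below target: action recorded
loop.step({"cell": "gnb1", "ul_mbps": 80.0})  # above target: nothing to do
print(loop.actions)  # ['reconfigure: low UL throughput on gnb1']
```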

💡 Getting started is simple: choose or customize an AIFabric blueprint from our portfolio, and deploy it with a single command:

```bash
brc install aifabric myfabric.yaml
```

📄 MX-AI Data Sheet | 💡 MX-AI Walkthrough | ▶️ MX-AI Webinar

What’s Included?

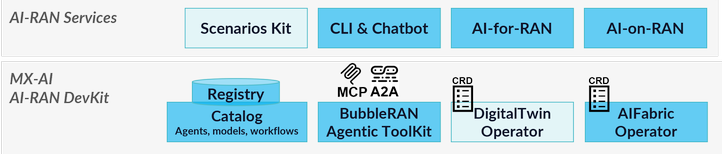

- Multi-Agent AIFabric: Cloud-native multi-agent runtime, Agent-to-Agent communication via A2A, and exposure of algorithms, datasets and ML models via MCP

- Agents Catalog: SMO Agent, Configuration Agent, UL Anomaly Detection Agent, API Agent, Supervisor Agent

- BubbleRAN Agentic Toolkit (BAT): Agent Development Kit and a CLI tool to create, build, benchmark and publish agents

- MX-HUB Access: Pull/push agents, LLMs, ML models, datasets and other artifacts, with versioning

- Web GUI, CLI & Chat: User-friendly interfaces for chatting with the agents

- Training Materials: Access to lab instructions, code samples, and tutorials.
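MCP messages are JSON-RPC 2.0 requests, and an agent invokes a tool exposed by an MCP server with the `tools/call` method. The sketch below only builds such a request; the tool name `get_ran_kpis` and its `node` argument are hypothetical, and the transport (stdio or HTTP) and the MCP initialization handshake are omitted.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requires a unique id per request


def mcp_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# Hypothetical tool exposed by an observability MCP server.
print(mcp_tool_call("get_ran_kpis", {"node": "gnb1"}))
```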

🚀 Add-on Specialized Agent

Tailored AI-agent development is available as an add-on service. Contact us for pricing and delivery of a personalized agentic solution.

Below is a summarized overview of our Agents and MCP Servers Catalog. For more details, visit our Open Documentation.

🟢 AVAILABLE 🟣 EXPERIMENTAL 🟡 PLANNED

| Category | Status / Agent | Description |
|---|---|---|
| Infra / Lifecycle | 🟢 NMS/SMO | Network Management and Operations |
| | 🟢 K8s API | Convert intents from human language to API calls |
| | 🟡 RIC | Enforce policies and/or deploy xApps/rApps |
| | 🟡 Kubernetes | Cluster health and resolution |
| Observability | 🟡 Digital Twin | What-if analysis, validate configurations |
| | 🟡 Data Collection | Dynamic Stats/KPIs/Logs collection |
| Compliance | 🟡 Specification | 3GPP/O-RAN Q&A |
| | 🟡 Judicial | Detect malicious or unauthorized behavior |
| Multi-Agent | 🟢 Supervisor | Orchestrate multiple agents, delegate subtasks |
| | 🟡 Mediation | Symbiotic mediator for multi-agent negotiations |
| Predictive & Ops | 🟡 PM | Control performance based on the SLA |
| | 🟣 UL Anomaly Detection | Process Stats/KPIs/Logs, spot UL interference |
| | 🟣 P0-Config | Control P0 nominal to optimize throughput |
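The Supervisor's role of delegating subtasks can be sketched as a registry that maps capabilities to agents. This is a hypothetical illustration: in practice the plan would be produced by a language model and agents would be reached over A2A, whereas here the plan is given explicitly and agents are plain Python functions.

```python
from typing import Callable, Dict, List, Tuple

Agent = Callable[[str], str]  # an "agent" here is just a function of a subtask


class Supervisor:
    """Toy supervisor that delegates subtasks to registered agents (hypothetical)."""

    def __init__(self) -> None:
        self.agents: Dict[str, Agent] = {}

    def register(self, capability: str, agent: Agent) -> None:
        self.agents[capability] = agent

    def run(self, plan: List[Tuple[str, str]]) -> List[str]:
        # Each plan step names the capability needed and the subtask text.
        results = []
        for capability, subtask in plan:
            agent = self.agents.get(capability)
            if agent is None:
                results.append(f"no agent for {capability!r}")
            else:
                results.append(agent(subtask))
        return results


sup = Supervisor()
sup.register("observability", lambda t: f"KPIs for {t}")
sup.register("config", lambda t: f"applied {t}")
print(sup.run([("observability", "gnb1"), ("config", "p0=-90"), ("ric", "deploy xApp")]))
```

Steps with no matching agent are reported rather than silently dropped, so a user can see which capability is missing from the fabric.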

| Category | Status / MCP Server | Description |
|---|---|---|
| Observability | 🟢 Observability DB | Support SMO Agent’s RAG with similarity search and enumeration tools |
| | 🟢 Anomalies DB | Store anomaly reports for the UL Anomaly Detection Agent |

Multi-Model Support

MX-AI supports several language model providers.

- We recommend a minimum of 16 GB of GPU memory to deploy local SLMs/LLMs. Large-scale deployments may need A100/H100 GPUs.

- Logos are trademarks of their respective owners and used here for identification only.

- Support for other model providers has not been tested yet but can be provided based on your specific needs.
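A back-of-the-envelope estimate helps size the GPU: model weights alone take roughly (parameter count) × (bytes per parameter). The sketch counts weights only and ignores the KV cache, activations and runtime overhead, so real usage is higher; the 7-billion-parameter model is just an example size.

```python
# Approximate storage per parameter for common numeric formats.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(n_params: float, precision: str = "fp16") -> float:
    """Memory for model weights only, in decimal GB (1 GB = 1e9 bytes).

    KV cache, activations and framework overhead are NOT included.
    """
    return n_params * BYTES_PER_PARAM[precision] / 1e9


for precision in ("fp16", "int8", "int4"):
    print(precision, weight_memory_gb(7e9, precision))
# fp16 14.0
# int8 7.0
# int4 3.5
```

At FP16, a 7B model already needs about 14 GB for its weights, which is consistent with the 16 GB recommendation; quantizing to 4 bits leaves far more headroom for the KV cache.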

Multi-Agent AIFabric Example


MX-AI Benefits at a Glance

| # | Benefit | Description |
|---|---|---|
| 1 | Built for O-RAN & AI-RAN | Aligned with O-RAN and AI-RAN standards and interfaces. |
| 2 | On-prem, sovereign AI | Run compact (5–30 GB) language models locally; data never leaves the network. |
| 3 | Agent-ready by design | Native support for A2A and MCP via BAT. |
| 4 | Test before deploying | Validate changes in a digital twin; deploy only if better. |
| 5 | Open AI Marketplace | Share and reuse Agents, MCP Servers, ML models, rApps, and datasets via MX-HUB. |
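"Test before deploying" boils down to a gate: score the current and proposed configurations in the digital twin and push the proposal only if it wins. A minimal sketch, where `twin_eval` is a hypothetical stand-in for a digital-twin run returning a KPI to maximize, `min_gain` is an assumed safety margin, and the toy twin's optimum of -90 dBm is arbitrary.

```python
from typing import Callable, Dict

Config = Dict[str, float]


def apply_if_better(current: Config, candidate: Config,
                    twin_eval: Callable[[Config], float],
                    min_gain: float = 0.0) -> Config:
    """Return the configuration to deploy.

    Both configurations are scored in the (hypothetical) twin, higher is better;
    the candidate is kept only if it beats the current one by more than min_gain.
    """
    baseline = twin_eval(current)
    proposed = twin_eval(candidate)
    return candidate if proposed > baseline + min_gain else current


def toy_twin(cfg: Config) -> float:
    # Toy KPI model (assumption): best result when p0 is -90 dBm.
    return -abs(cfg["p0"] + 90.0)


print(apply_if_better({"p0": -100.0}, {"p0": -92.0}, toy_twin))  # {'p0': -92.0}
print(apply_if_better({"p0": -92.0}, {"p0": -80.0}, toy_twin))   # {'p0': -92.0}
```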

Practical Use-Cases

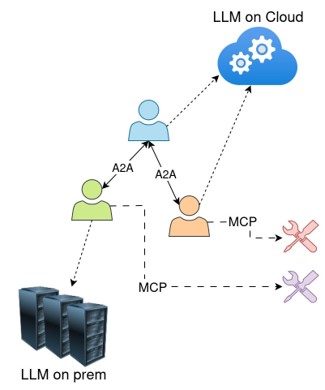

Optimize RAN Configuration

- Observability rApp: collect RAN KPIs.

- ML Model: use KPIs and current configuration to predict a tentative improved configuration.

- Configuration Agent: access the rApp and ML Model with MCP to satisfy user requests. Enforce new configurations.

- Example query: Propose a new P0 nominal value to maximize the uplink throughput of the RAN node gnb1.
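One simple way for the Configuration Agent to turn an ML prediction into a proposal is to score a grid of candidate P0 values and keep the best one. In this sketch, `predict_ul_mbps` is a hypothetical surrogate for the trained model (already conditioned on the current KPIs and configuration), and the quadratic toy model is invented for illustration. Candidates are filtered to the value range of `p0-NominalWithGrant` in 3GPP TS 38.331 (-202 to 24 dBm).

```python
from typing import Callable, Iterable

# Value range of p0-NominalWithGrant (dBm) in 3GPP TS 38.331.
P0_MIN_DBM, P0_MAX_DBM = -202, 24


def propose_p0(predict_ul_mbps: Callable[[int], float],
               candidates: Iterable[int]) -> int:
    """Return the in-range candidate P0 nominal with the highest predicted UL throughput."""
    valid = [p0 for p0 in candidates if P0_MIN_DBM <= p0 <= P0_MAX_DBM]
    if not valid:
        raise ValueError("no candidate P0 value within the allowed range")
    return max(valid, key=predict_ul_mbps)


def toy_model(p0: int) -> float:
    # Hypothetical surrogate: predicted UL throughput peaks at -86 dBm.
    return 100.0 - 0.5 * (p0 + 86) ** 2


print(propose_p0(toy_model, range(-100, -70, 2)))  # -86
```

The proposal would then go through the same "deploy only if better" check before being enforced.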

SLA Enforcement

- SLA rApp/xApp: control RAN PRB allocation in a closed loop to create a network slice offering a target DL/UL throughput.

- SLA Agent: access the SLA rApp with MCP to translate high-level intents into requests for the SLA rApp.

- Example query: Create a new network slice that allows me to watch videos in 4K.
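The closed loop run by the SLA rApp/xApp can be illustrated with a textbook proportional controller: each control period, the slice's share of PRBs moves in proportion to the relative throughput shortfall. This is a swapped-in technique for illustration, not BubbleRAN's controller; the gain, the bounds, and the linear throughput model used in the demo are assumptions.

```python
def update_prb_share(share: float, measured_mbps: float, target_mbps: float,
                     gain: float = 0.1, lo: float = 0.01, hi: float = 1.0) -> float:
    """One proportional-control step on the slice's fraction of PRBs.

    The share grows when the slice is below its throughput target,
    shrinks when it is above, and is clamped to [lo, hi].
    """
    error = (target_mbps - measured_mbps) / target_mbps  # relative shortfall
    return min(hi, max(lo, share + gain * error))


# Toy plant (assumption): slice throughput scales linearly with its PRB share.
CELL_CAPACITY_MBPS = 200.0
share = 0.10
for _ in range(50):
    measured = share * CELL_CAPACITY_MBPS
    share = update_prb_share(share, measured, target_mbps=50.0)
print(round(share, 3))  # converges to 50 / 200 = 0.25
```

A small gain trades convergence speed for stability: with the values above, each step closes 40% of the remaining gap without overshooting.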

Uplink Anomaly Detection

- Observability rApp: collect RAN KPIs.

- ML Model: use KPI time series to detect uplink interference. Generate anomaly reports.

- UL AD Agent: retrieve anomaly reports and provide a tentative root-cause analysis.

- Example query: Did you detect any malfunction between 3AM and 5AM?
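As a simple stand-in for the ML model, a rolling z-score flags samples of an uplink interference KPI that deviate sharply from their recent history and emits one report per anomaly. The statistical method is a swapped-in baseline, not the model MX-AI ships; the window size, threshold, timestamps and dBm values are hypothetical.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Iterable, List, Tuple


def detect_ul_anomalies(samples: Iterable[Tuple[str, float]], window: int = 12,
                        z_threshold: float = 3.0) -> List[dict]:
    """Report samples whose value is more than z_threshold standard deviations
    away from the mean of the trailing window."""
    history: Deque[float] = deque(maxlen=window)
    reports = []
    for timestamp, value in samples:
        if len(history) == window:
            mu, sigma = mean(history), pstdev(history)
            if sigma > 0 and abs(value - mu) / sigma > z_threshold:
                reports.append({"time": timestamp, "value": value,
                                "zscore": round((value - mu) / sigma, 1)})
        history.append(value)
    return reports


# Hypothetical UL interference readings (dBm) with one spike at t12.
readings = [(f"t{i}", -110.0 if i % 2 == 0 else -109.0) for i in range(12)]
readings.append(("t12", -95.0))
readings += [(f"t{i}", -110.0 if i % 2 == 0 else -109.0) for i in range(13, 16)]
for report in detect_ul_anomalies(readings):
    print(report)
```

An agent answering the example query would filter such reports by time window before attempting a root-cause analysis.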

Ready to test?

```bash
brc install aifabric tutorial-agent.yaml
```

- BubbleRAN Agentic Toolkit

- MX-AI Agents Training - Tutorial in our Open Documentation

- BubbleRAN Command Line (brc) - Tutorial in our Open Documentation

Would you like to talk with our team? Book a live demo.

Need more information?

Check our frequently asked questions about MX-AI to learn more and get quick replies.

BubbleRAN also offers tailored AI-agent development as an add-on service. Whether you need a specialized agent or want to extend the MX-AI platform with custom capabilities, our team can work with you to define the technical specifications and deliver a personalized solution. Contact us to discuss your needs and explore the details.

To address your specific deployment and project needs, we can schedule a live demo, help you move forward with a requirements questionnaire, and connect you with our partner ecosystem (universities, system integrators, cloud providers). 📧 contact@bubbleran.com

Frequently Asked Questions

1️⃣ Can MX-AI run fully on-prem?

Yes. SLM-first deployments run on modest edge GPUs. You can burst to larger GPUs when needed.

2️⃣ Do you integrate with our SMO/RIC?

MX-AI is O-RAN-friendly (R1/A1) and offers REST/gRPC adapters for common SMO/RIC northbound interfaces (NBIs).

3️⃣ How do we publish and reuse agents?

Via MX-HUB. Pull/push agents, datasets, and tuned models with versioning and fork/merge workflows.

4️⃣ What hardware do we need?

MX-AI runs great on a single 16–24 GB GPU.

For training or large-scale inference, use A100/H100 class GPUs.

5️⃣ What if I don’t have my own GPU?

You will need an API key from an external model provider, as API access is not included by default.

6️⃣ How is data privacy handled?

Data stays local in sovereign mode.

We support audit logging, role-based access, and optional federated learning.

No telemetry leaves your site unless you opt in.

7️⃣ Why MX-AI?

- Tame 5G/6G complexity - Autonomously orchestrate multi-vendor RAN/Core with closed loops.

- Proactive operations - Agents detect anomalies, predict faults, and cut MTTR from hours to minutes.

- Sovereign by design - Run SLMs (5–20 GB) fully on-prem/edge; burst to large GPUs only when needed.

- Digital-twin safeguard - Test policies in MX-DT and push only if better (“apply-if-better” gates).

- One hub for reuse - Share xApps/rApps, agents, and tuned models via MX-HUB, no lock-in.